✍️ Configuring a Hadoop cluster using Ansible

What is Hadoop?

Hadoop is an Apache open-source framework written in Java that allows distributed processing of large datasets across clusters of computers using simple programming models. A Hadoop application works in an environment that provides distributed storage and computation across clusters of computers. Hadoop is designed to scale up from a single server to thousands of machines, each offering local computation and storage.

What is NameNode?

The NameNode stores the metadata of the cluster: which files exist and which DataNodes hold their blocks. It does not store the file data itself. When a client reads or writes data, the NameNode tells the client which DataNodes to contact.

What is a Data Node?

A Data Node is also known as a slave node or worker node. Data Nodes store the actual data in a Hadoop cluster, and Data Node is also the name of the daemon that manages that data on each machine. File data is replicated on multiple Data Nodes for reliability and so that localized computation can be executed near the data.

For a detailed understanding of a Hadoop cluster, you can refer to my blog on Hadoop.

Red Hat Ansible

Ansible is an open-source automation tool, or platform, used for IT tasks such as configuration management, application deployment, intra-service orchestration, and provisioning. Automation is crucial these days, because IT environments are too complex and often need to scale too quickly for system administrators and developers to keep up if they do everything manually. Automation simplifies complex tasks, which not only makes developers’ jobs more manageable but also lets them focus on other work that adds value to an organization. In other words, it frees up time and increases efficiency. Ansible is rapidly rising to the top in the world of automation tools.

Here we will configure the Hadoop cluster with the help of Ansible.

Ansible Playbook

Playbooks are the files where the Ansible code is written. Playbooks are written in YAML format. YAML originally stood for Yet Another Markup Language and is now officially expanded as YAML Ain’t Markup Language. Playbooks are one of the core features of Ansible and tell Ansible what to execute. They are like a to-do list for Ansible that contains a list of tasks.

Playbooks contain the steps which the user wants to execute on a particular machine. The tasks in a playbook run in order, from top to bottom. Playbooks are the building blocks for all the use cases of Ansible.
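To make the to-do list idea concrete, here is a minimal playbook sketch. It is only an illustration and is not part of the cluster setup: the play name and the message text are made up, while `ansible.builtin.ping` and `ansible.builtin.debug` are standard Ansible modules.

```yaml
# minimal.yml (illustrative only)
- name: Minimal example play
  hosts: all                      # run against every host in the inventory
  tasks:
    # Task 1 runs first on each host.
    - name: Check that the managed node is reachable
      ansible.builtin.ping:

    # Task 2 runs only after task 1 has succeeded on that host.
    - name: Print a message after the ping succeeds
      ansible.builtin.debug:
        msg: "Connected to {{ inventory_hostname }}"
```

Each entry under `tasks` is one step, and Ansible works through them in the order they are written.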

So let’s start.

First, we need the inventory file, which contains the IPs of the systems that we have to configure as the NameNode and the DataNode.

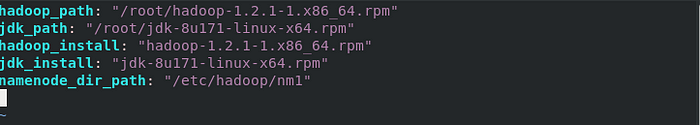
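As a rough sketch, an inventory in Ansible’s YAML format could look like the following. The group names `namenode` and `datanode` and the IP addresses are assumptions chosen for illustration (the addresses come from the documentation-only 192.0.2.0/24 range), so replace them with your own systems.

```yaml
# inventory.yml (illustrative; group names and IPs are placeholders)
all:
  children:
    namenode:
      hosts:
        192.0.2.10:               # system to configure as the NameNode
    datanode:
      hosts:
        192.0.2.21:               # systems to configure as DataNodes
        192.0.2.22:
```

Keeping the two roles in separate groups lets each playbook target only the hosts it is meant for.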

1. First, I created a separate variable file for the NameNode, which contains the paths of the software that needs to be installed on it.

The variables are inside the namenode_var.yml file.

This includes the Hadoop and JDK software that the Ansible control node needs to copy to the NameNode and install there.

It also includes the path of the NameNode directory that needs to be created.
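A variable file of this kind might look roughly like the sketch below. Every variable name, path, and the port number here is hypothetical and chosen only for illustration, and the package file names are placeholders, so use the names of the files you actually downloaded.

```yaml
# namenode_var.yml (illustrative; all names and values are assumptions)
jdk_rpm_src: /root/software/jdk.rpm        # JDK package on the control node
jdk_rpm_dest: /root/jdk.rpm                # where to copy it on the NameNode
hadoop_rpm_src: /root/software/hadoop.rpm  # Hadoop package on the control node
hadoop_rpm_dest: /root/hadoop.rpm          # where to copy it on the NameNode
hadoop_conf_dir: /etc/hadoop               # directory holding the Hadoop config files
namenode_dir: /nn                          # NameNode directory to create
namenode_port: 9001                        # port the NameNode listens on
```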

2. Next, the namenode.yml playbook configures the Ansible managed node as the Hadoop NameNode.

- First, copy the JDK and Hadoop software to the managed node and install it.

- Create the NameNode directory.

- Configure the Hadoop hdfs-site and core-site files.

- Format the NameNode directory.

- Finally, start the NameNode service.

- Check how many DataNodes are connected.
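The steps above can be sketched as a playbook like the one below. This is not the exact playbook from this setup but a minimal sketch under several assumptions: the software comes as RPM packages that install cleanly on a dnf-based host, Ansible connects as root, the Hadoop commands are on the PATH, and the variable names are the hypothetical ones from a namenode_var.yml file (`jdk_rpm_src`, `hadoop_conf_dir`, `namenode_dir`, and so on). It uses the older-style commands and property names (`hadoop namenode -format`, `dfs.name.dir`, `fs.default.name`), so adjust them to your Hadoop release if needed.

```yaml
# namenode.yml (illustrative sketch; variable names are assumptions)
- name: Configure the managed node as the Hadoop NameNode
  hosts: namenode                 # assumed inventory group name
  vars_files:
    - namenode_var.yml
  tasks:
    - name: Copy the JDK and Hadoop packages from the control node
      ansible.builtin.copy:
        src: "{{ item.src }}"
        dest: "{{ item.dest }}"
      loop:
        - { src: "{{ jdk_rpm_src }}", dest: "{{ jdk_rpm_dest }}" }
        - { src: "{{ hadoop_rpm_src }}", dest: "{{ hadoop_rpm_dest }}" }

    - name: Install both packages (assumes RPMs on a dnf-based host)
      ansible.builtin.dnf:
        name:
          - "{{ jdk_rpm_dest }}"
          - "{{ hadoop_rpm_dest }}"
        state: present
        disable_gpg_check: true   # local packages, no repository signature

    - name: Create the NameNode directory
      ansible.builtin.file:
        path: "{{ namenode_dir }}"
        state: directory

    - name: Write hdfs-site.xml with the NameNode directory
      ansible.builtin.copy:
        dest: "{{ hadoop_conf_dir }}/hdfs-site.xml"
        content: |
          <?xml version="1.0"?>
          <configuration>
            <property>
              <name>dfs.name.dir</name>
              <value>{{ namenode_dir }}</value>
            </property>
          </configuration>

    - name: Write core-site.xml with the address the NameNode listens on
      ansible.builtin.copy:
        dest: "{{ hadoop_conf_dir }}/core-site.xml"
        content: |
          <?xml version="1.0"?>
          <configuration>
            <property>
              <name>fs.default.name</name>
              <value>hdfs://0.0.0.0:{{ namenode_port }}</value>
            </property>
          </configuration>

    # "echo Y" answers the confirmation prompt if the release asks for one.
    # "creates" skips this task once the directory has been formatted.
    - name: Format the NameNode directory
      ansible.builtin.shell: echo Y | hadoop namenode -format
      args:
        creates: "{{ namenode_dir }}/current"

    - name: Start the NameNode service
      ansible.builtin.command: hadoop-daemon.sh start namenode

    - name: Ask the NameNode for a cluster report
      ansible.builtin.command: hadoop dfsadmin -report
      register: dfs_report
      changed_when: false         # read-only check, never a change

    - name: Show the report, including the connected DataNodes
      ansible.builtin.debug:
        var: dfs_report.stdout_lines
```

The format task is guarded with `creates` so that a second run does not reformat an existing NameNode directory. The start task has no such guard in this sketch, so running the playbook again while the NameNode is already running may need an extra check.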

Now we have completed the configuration of the NameNode.

Next, we will configure the DataNode.

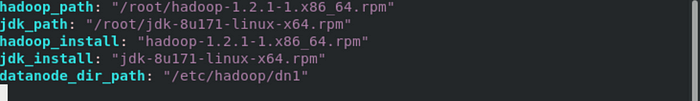

3. As with the NameNode variables, I created a datanode_var.yml file, which contains all the paths for copying and installing the software.

This includes the Hadoop and JDK software that the Ansible control node needs to copy to the DataNode and install there.

It also includes the path of the DataNode directory that needs to be created.
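A sketch of such a file is shown below. As with the NameNode variables, every name, path, address, and port here is hypothetical. The two extra entries, `namenode_ip` and `namenode_port`, are an assumption about how the DataNode learns where the NameNode is.

```yaml
# datanode_var.yml (illustrative; all names and values are assumptions)
jdk_rpm_src: /root/software/jdk.rpm        # JDK package on the control node
jdk_rpm_dest: /root/jdk.rpm                # where to copy it on the DataNode
hadoop_rpm_src: /root/software/hadoop.rpm  # Hadoop package on the control node
hadoop_rpm_dest: /root/hadoop.rpm          # where to copy it on the DataNode
hadoop_conf_dir: /etc/hadoop               # directory holding the Hadoop config files
datanode_dir: /dn                          # DataNode directory to create
namenode_ip: 192.0.2.10                    # placeholder address of the NameNode
namenode_port: 9001                        # port the NameNode listens on
```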

4. Next, the datanode.yml playbook configures the Ansible managed node as the Hadoop DataNode.

- First, as before, copy the JDK and Hadoop software to the managed node and install it.

- Create the DataNode directory.

- Configure the Hadoop hdfs-site and core-site files.

- Finally, start the DataNode service.
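These DataNode steps can be sketched in the same way. Again, this is a minimal sketch and not the exact playbook from this setup: it assumes RPM packages on a dnf-based host, a root connection, Hadoop commands on the PATH, hypothetical variable names from a datanode_var.yml file, and the older-style property names `dfs.data.dir` and `fs.default.name`.

```yaml
# datanode.yml (illustrative sketch; variable names are assumptions)
- name: Configure the managed node as a Hadoop DataNode
  hosts: datanode                 # assumed inventory group name
  vars_files:
    - datanode_var.yml
  tasks:
    - name: Copy the JDK and Hadoop packages from the control node
      ansible.builtin.copy:
        src: "{{ item.src }}"
        dest: "{{ item.dest }}"
      loop:
        - { src: "{{ jdk_rpm_src }}", dest: "{{ jdk_rpm_dest }}" }
        - { src: "{{ hadoop_rpm_src }}", dest: "{{ hadoop_rpm_dest }}" }

    - name: Install both packages (assumes RPMs on a dnf-based host)
      ansible.builtin.dnf:
        name:
          - "{{ jdk_rpm_dest }}"
          - "{{ hadoop_rpm_dest }}"
        state: present
        disable_gpg_check: true   # local packages, no repository signature

    - name: Create the DataNode directory
      ansible.builtin.file:
        path: "{{ datanode_dir }}"
        state: directory

    - name: Write hdfs-site.xml with the DataNode directory
      ansible.builtin.copy:
        dest: "{{ hadoop_conf_dir }}/hdfs-site.xml"
        content: |
          <?xml version="1.0"?>
          <configuration>
            <property>
              <name>dfs.data.dir</name>
              <value>{{ datanode_dir }}</value>
            </property>
          </configuration>

    - name: Write core-site.xml pointing at the NameNode
      ansible.builtin.copy:
        dest: "{{ hadoop_conf_dir }}/core-site.xml"
        content: |
          <?xml version="1.0"?>
          <configuration>
            <property>
              <name>fs.default.name</name>
              <value>hdfs://{{ namenode_ip }}:{{ namenode_port }}</value>
            </property>
          </configuration>

    - name: Start the DataNode service
      ansible.builtin.command: hadoop-daemon.sh start datanode
```

Unlike the NameNode, the DataNode directory is not formatted. The only other differences are the storage property and the core-site.xml address, which points at the NameNode instead of 0.0.0.0.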

Now we have completed the configuration of the DataNode.

So the overall setup is complete!

5. Now run the playbooks from the control node with the following commands.

# ansible-playbook namenode.yml

# ansible-playbook datanode.yml
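If you prefer a single command, one option is a small wrapper playbook that imports both playbooks in order. The file name `site.yml` is only a suggestion and not part of this setup. `ansible.builtin.import_playbook` is a standard Ansible feature.

```yaml
# site.yml (optional wrapper; the file name is an assumption)
# The NameNode is configured first so that DataNodes have something to connect to.
- name: Configure the NameNode
  ansible.builtin.import_playbook: namenode.yml

- name: Configure the DataNodes
  ansible.builtin.import_playbook: datanode.yml
```

Running `ansible-playbook site.yml` would then execute both playbooks one after the other.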

So we have completed the task of configuring the Hadoop cluster using Ansible.

I hope you all will like my blog…..✍️

Do mention your comments about my blog.📌

Thanks to all in advance.😊😌😌